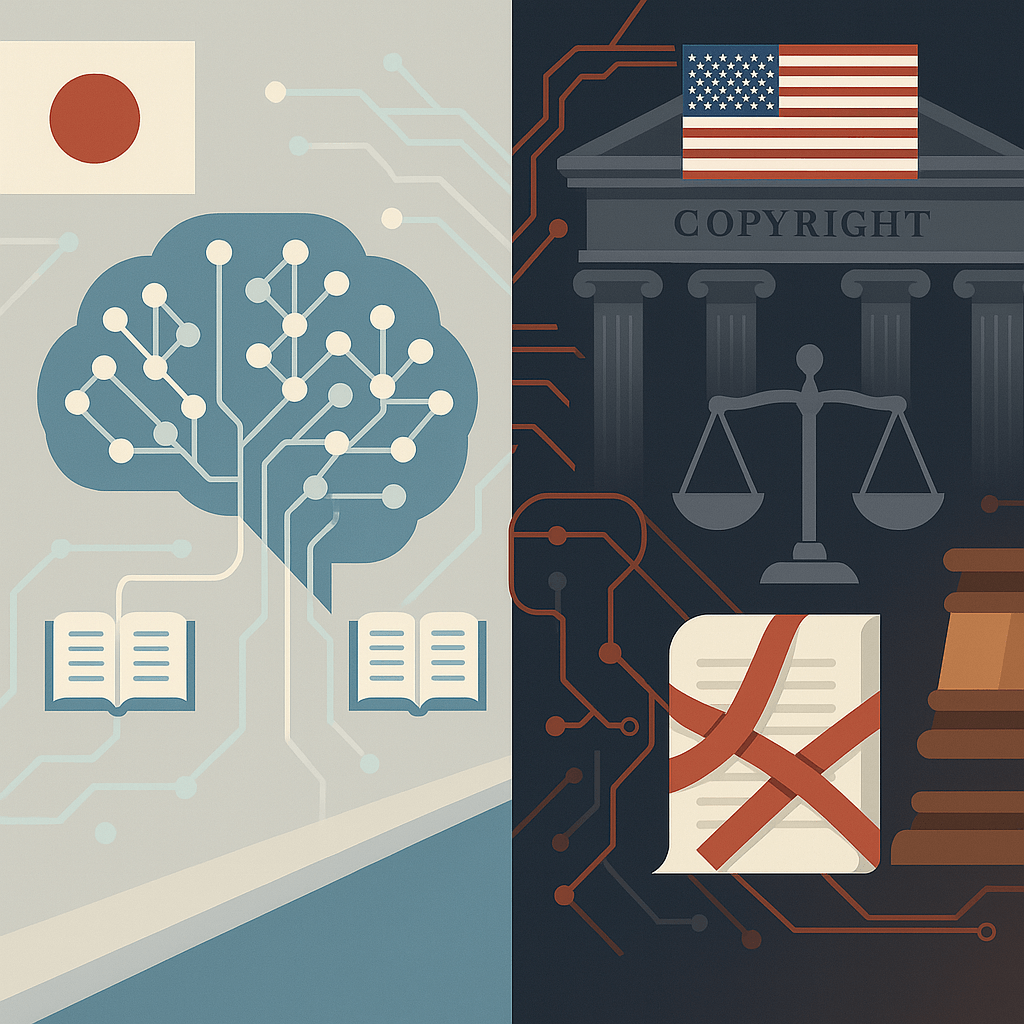

Is It OK To Train AI With Pirated Copyrighted Books?

I have been following two court cases in the US about big-tech companies using pirated material to train AI. These cases are interesting because they are among the first of their kind and are likely to be cited as precedents that shape how we train our AI (a.k.a. Large Language Models or LLMs) in the future.

Disclaimer: As someone working directly with LLMs and AI, I'm sharing my thoughts here. Please keep in mind that I'm not a lawyer, and this isn't legal guidance.

Background Of The Cases

The two court cases are:

- Kadrey vs. Meta Platforms, Inc.

- Bartz vs. Anthropic

Authors are suing Meta and Anthropic, alleging that the tech companies used their copyrighted books to train their large language models (LLMs). During the proceedings, it became clear that these companies had in fact used pirated versions of copyrighted books to train their LLMs. They didn't even try to deny it! Instead, the tech companies defended themselves by arguing that it's OK for them to do so.

Most of us laypeople would probably find that unbelievable and assume the tech companies did something wrong, right? Well, at least I did.

But in separate rulings this week, the judges dismissed copyright infringement claims against Meta and Anthropic, yet both decisions came with significant caveats that may actually provide a road map for future successful challenges.

The Differences Between Meta's And Anthropic's Cases

My perspective on these cases leading up to this analysis was informed primarily by two sources: a piece from NPR by Chloe Veltman, and a particularly insightful summary in Diginomica penned by Chris Middleton.

Meta's Case (Judge Chhabria):

- The Problem: Authors (including Sarah Silverman, Ta-Nehisi Coates) sued because Meta trained AI on their books without permission

- What Authors Failed to Prove: They couldn't show specific financial harm or that Meta's AI was directly competing with their work

- Judge's Reasoning: "You haven't proven this hurt you personally or your book sales"

- The Smoking Gun: Judge found Meta deliberately chose pirated books over paying for licenses after CEO Mark Zuckerberg was involved in the decision

- Judge's Warning: Strongly suggested future cases could win by proving market harm

Anthropic's Case (Judge Alsup):

- The Problem: Three authors sued Anthropic for training Claude AI on their copyrighted works

- Judge's Reasoning: Focused on "transformation" - argued the AI learned from books to create something completely different, like a student reading to become a better writer

- Judge's Warning: Judge said this would be "a different case" if authors could prove the AI was making direct copies or knockoffs

Using Simon Willison's llm tool, I got gemma3:12b to write me something that a layperson can understand based on the points above:

Meta's case was like a student who got caught cheating by using stolen textbooks instead of buying them, but the teacher couldn't punish them because the original textbook authors couldn't prove their grades suffered as a result. The judge basically said "this student probably did something wrong, but you haven't proven it hurt you."

Anthropic's case was like a student who read lots of books (legally or illegally) and then wrote original essays. The judge said "reading books to learn how to write is okay, even if you didn't pay for the books" - but warned that if the student was just copying paragraphs directly, that would be different.

The Bottom Line: Meta lost on worse facts (deliberately using pirated content to save money) but won on a technicality. Anthropic won on a more substantive argument about "learning vs. copying" but with a big warning about what would happen if they were actually copying content directly.

While Meta escaped responsibility due to a technicality because the plaintiffs failed to demonstrate enough harm, the judge strongly disapproved of the company’s training methods and criticized its intentional use of illegally obtained content to avoid paying licensing fees.

Meanwhile, Anthropic won its case based on the idea that its use of the content was transformative. However, the judge warned that this argument would fail if the plaintiffs could prove the AI was creating exact copies or close substitutes. These cases haven’t settled the legal questions about how AI is trained; instead, they're highlighting the specific arguments that could be used to question the industry’s current practices. This gives nearly 50 other pending lawsuits around the world a clearer direction.

I'm not going to go into a legal discussion on the merits of the cases. Instead, I want to focus on how I think this will affect those of us who use AI to do our work.

I think these rulings show us three main things:

- Companies developing LLMs need to think twice about how they train their models to give themselves an edge.

- Sheer model size might not mean much anymore, and we may be better off using smaller but specialized models trained on data that we own.

- We really need to figure out how to compensate copyright owners for their work if we want to use it in our LLMs.

Japan's Article 30-4

Now, let's look at Japan. Here, we have Article 30-4 of the Copyright Act (著作権法 第30条の4), which was amended in 2018:

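第30条の4(著作物に表現された思想又は感情の享受を目的としない利用) 著作物に表現された思想又は感情を自ら享受し又は他人に享受させることを目的としない場合には、その必要と認められる限度において、いずれの方法によるかを問わず、当該著作物の利用をすることができる。 ただし、当該著作物の種類及び用途並びにその利用の態様に照らして著作権者の利益を不当に害することとなると認められる場合には、当該著作物の利用をすることができない。

In English (my own unofficial translation): Article 30-4 (Use not for the purpose of enjoying the thoughts or sentiments expressed in a work). Where the purpose is not to enjoy, or to have others enjoy, the thoughts or sentiments expressed in a work, the work may be used by any means, to the extent considered necessary. However, the work may not be used if, in light of the type and purpose of the work and the manner of its use, doing so would unreasonably harm the interests of the copyright owner.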

There are two important things to keep in mind:

- Whether you're using the copyrighted material to enjoy what it expresses (for example, reading a book or watching a movie for pleasure).

- Whether the use unreasonably harms the interests of the copyright owner.

As I understand it, Japanese law doesn't distinguish based on how you obtained the material. It also doesn't have the same idea of "fair use" that you find in the US.

Basically, you're probably allowed to go ahead if three things hold. First, you're using the copyrighted material to teach your AI, not for your own enjoyment (like reading a book or watching a movie). Second, your experiments and research don't threaten the rights and income of the creators (for example, by creating something very similar that makes their work less valuable). Third, you use the material only to the extent that is necessary.

Of course, it’s not quite that simple. Agreements with publishers and other legal commitments related to the material still apply. Just because Article 30-4 doesn’t say anything about how you found the training data doesn't mean you can just take copyrighted books from a library; that would be theft and a crime.

Source: 改正著作権法 第30条の4解説:AI・ビッグデータ時代の著作権法

To Summarize

Given Japan's Article 30-4, it may be easier to obtain the data you need if you train AI models in Japan. This law allows training on copyrighted material, as long as the material is used for purposes such as research and analysis rather than enjoyment, and the use doesn't unreasonably harm the interests of the copyright owners.

However, my earlier points about using smaller, specialized AI models and finding ways to pay creators are still important. Research shows that the improvements we see from AI models often get smaller as they get larger. This suggests there's a limit to how much we can achieve with very large AI models.

Finally, regardless of whether we can legally use copyrighted material to train AI without paying creators, I still think we should find a way to compensate them. We don't want our AI to make it less appealing for people to create new works. Machines can only mimic what humans have already made; they can't truly imagine or invent new things, and they never truly understand what they "create".