Stay Connected with AI, Off-line And Free: The Magic of Llamafiles

This will be a slightly technical post. I know that most of my readers are in business-related roles, so I won't go deep into how everything works technically, but I want to share something I have found useful and that I think shows one possible future for how we will use AI.

Not Only ChatGPT

I'm pretty sure most of you reading this know of, and have used, ChatGPT by OpenAI. ChatGPT has done a great job of opening up the subject of AI to us, but did you know that ChatGPT is not the only "AI" based chat tool you can use? There are other alternatives out there, such as Google's Bard, Microsoft's Copilot and Claude by Anthropic.

All of the above AI tools have one thing in common, though: they are online services, and you need an internet connection to use them.

Did you know that you can use an AI tool that helps you summarize and write text, similar to ChatGPT, even without an internet connection?

Well, I read Simon Willison's blog. Simon is an active member of our Python community and currently serves on the board of the Python Software Foundation (PSF). He has been actively experimenting with AI technologies, especially with the different open-source models, and building tools to make AI easier to use for the rest of us.

One llamafile To Rule Them All

In particular, I would like to point you to a post he wrote in November 2023, which introduces something called a llamafile.

So what's so great about a llamafile? A llamafile lets us distribute a Large Language Model (LLM) as a single file that you can use offline, and that same file runs on many different platforms without installing anything.

A llamafile is like a big, all-in-one package for running AI programs on your computer. It has everything you need inside: the AI's "brain" (which we call the model weights) and the program that knows how to run the LLM. It even includes its own mini website, so you can interact with the AI directly from your web browser. The LLMs used are open-source LLMs such as Mistral.

If you've been using computers for some time, I think you can appreciate how difficult this is. Distributing an LLM in just one file is a big feat in itself, and making that same file run on multiple platforms like Windows, Mac or Linux without installing any other software first is just plain amazing.

Using llamafile

Download the llamafile from Hugging Face (WARNING: it's around 4.3GB in size).

Once it's downloaded, open a terminal, make the file executable (for example with chmod +x llava-v1.5-7b-q4.llamafile) and run it like this:

iqbal [~/llama]$ ./llava-v1.5-7b-q4.llamafile

I'm running this on Ubuntu Linux, but if you're using Windows, you might find this article useful.

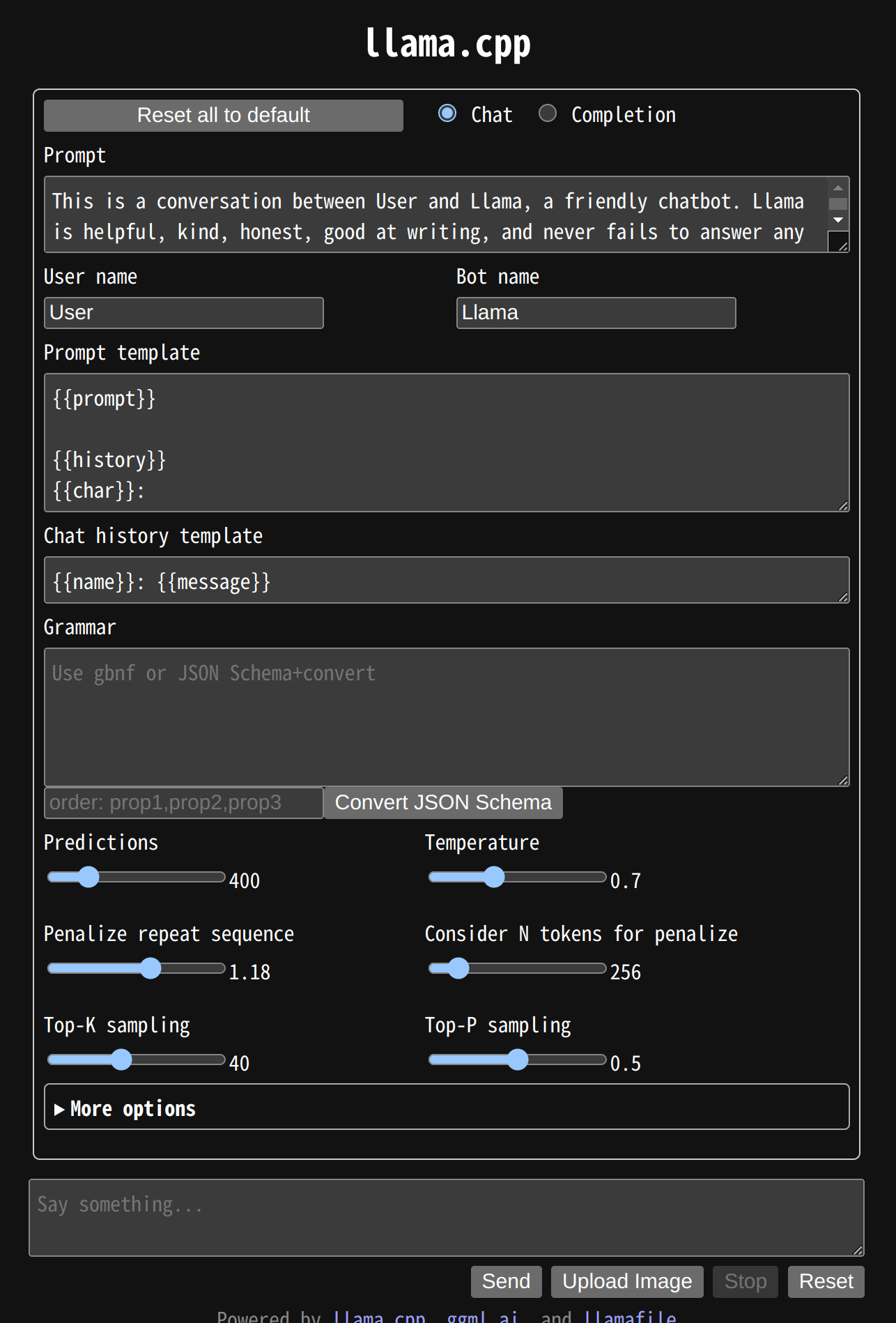
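If you'd rather script those two steps, here is a minimal Python sketch for Linux or macOS that adds the execute permission (like chmod u+x) and then starts the llamafile in the background. It assumes the file sits in the current directory under the name used in the command above; Windows needs different steps, covered in the article mentioned above.

```python
import os
import stat
import subprocess

# Assumption: the downloaded file is in the current directory under this name.
LLAMAFILE = "./llava-v1.5-7b-q4.llamafile"


def make_executable(path: str) -> None:
    """Add the owner's execute bit, like `chmod u+x path`."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR)


def launch(path: str) -> subprocess.Popen:
    """Make the llamafile executable and start it as a background process."""
    make_executable(path)
    # Run with no arguments, the llamafile starts its local web server.
    return subprocess.Popen([path])


if __name__ == "__main__":
    if os.path.exists(LLAMAFILE):
        proc = launch(LLAMAFILE)
        print(f"llamafile started (PID {proc.pid}); open http://127.0.0.1:8080/")
    else:
        print(f"{LLAMAFILE} not found; download it first.")
```

The llamafile keeps running after the script exits, so stop it later with kill followed by the printed PID.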

Once you run it, you can access it through your browser at http://127.0.0.1:8080/ and this is what you'll see:

It's OK to just leave the default parameters as it is. If you feel adventurous, you can try changing them and see the results for yourselves.

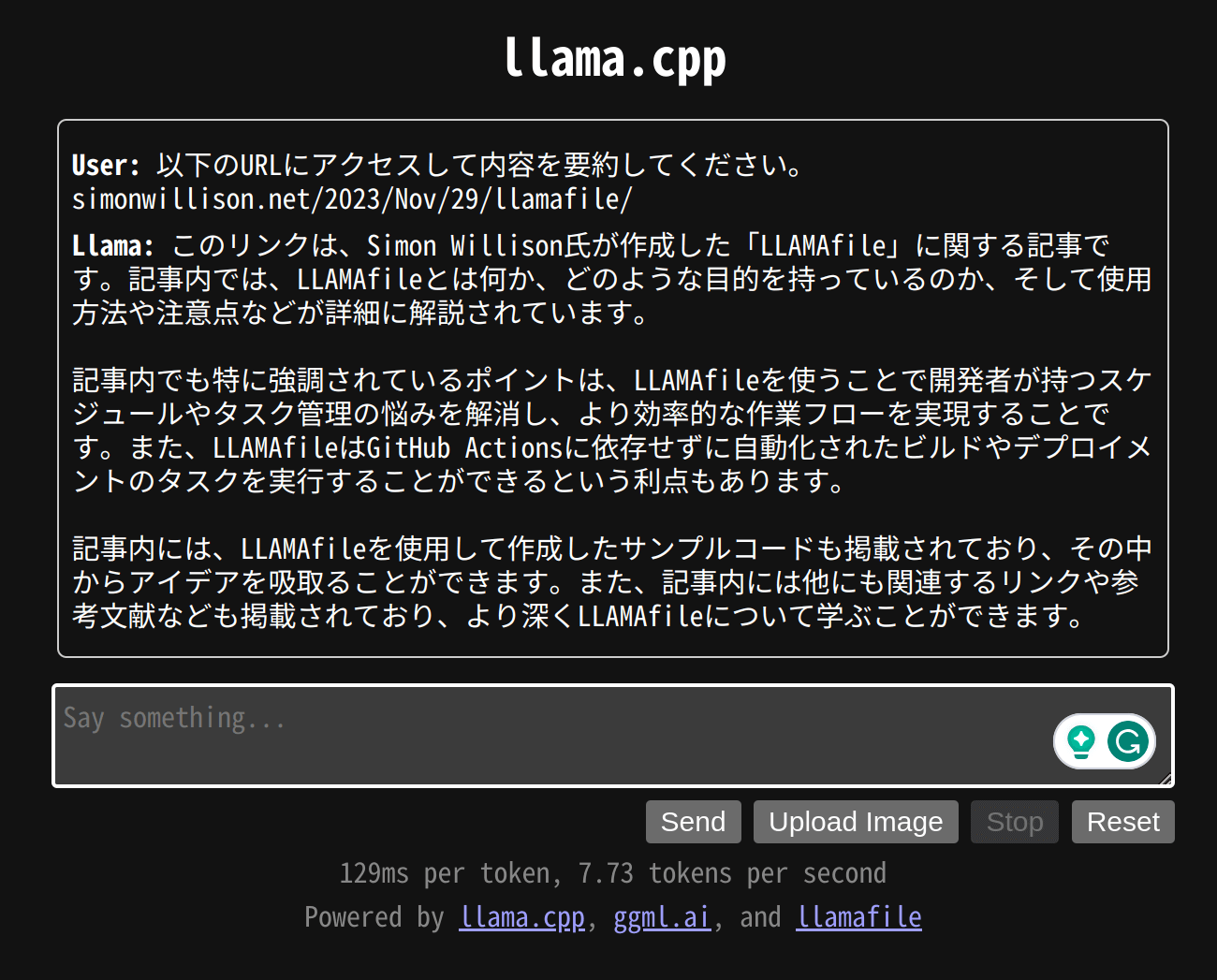
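The browser page talks to a local HTTP server, so you can also query the model from your own scripts. This is a minimal sketch, assuming the server bundled in the llamafile is the llama.cpp server with its /completion endpoint (a JSON body with prompt and n_predict, returning the generated text in a content field) and that the LLaVA model expects a "USER: ... ASSISTANT:" style prompt. The temperature value is an arbitrary choice; check the llamafile documentation for your version before relying on any of this.

```python
import json
import urllib.request

# Assumption: the llamafile runs locally with default settings (port 8080).
COMPLETION_URL = "http://127.0.0.1:8080/completion"


def build_completion_request(question: str, max_tokens: int = 256) -> dict:
    """Build a JSON body for the llama.cpp server's /completion endpoint."""
    # LLaVA-style prompt template (an assumption; other models use others).
    prompt = f"USER: {question}\nASSISTANT:"
    # temperature=0.7 is an arbitrary illustrative setting.
    return {"prompt": prompt, "n_predict": max_tokens, "temperature": 0.7}


def ask(question: str) -> str:
    """Send one prompt to the local server and return the generated text."""
    body = json.dumps(build_completion_request(question)).encode("utf-8")
    req = urllib.request.Request(
        COMPLETION_URL,
        data=body,
        headers={"Content-Type": "application/json"},
    )
    # Generation on a CPU can take a while, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read())["content"]


if __name__ == "__main__":
    try:
        print(ask("Summarize what a llamafile is in one sentence."))
    except OSError as err:  # URLError (e.g. server not running) is an OSError
        print("Request failed:", err)
```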

I tested it by asking it to access Simon's blog post and summarize it for me, in Japanese and this is what it gave me:

Pretty impressive. On my Dell X13 9310 laptop which I bought at the end of 2021 with no GPU, as you can see above it generated 7.73 tokens per second. This number just shows how "fast" it can generate answers for you. The bigger your memory and CPU are, the higher this number will be.

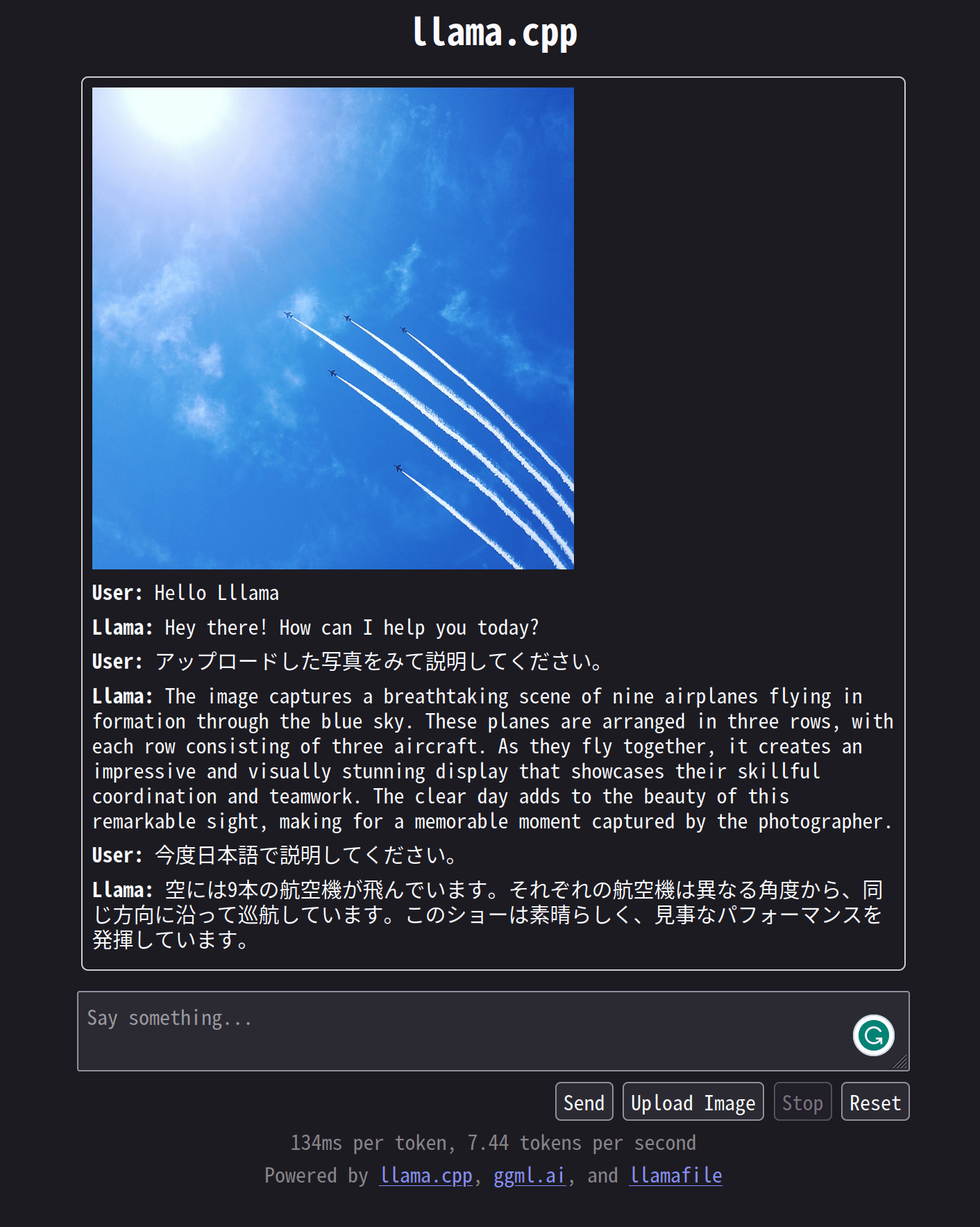
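To get a feel for what 7.73 tokens per second means in practice, divide the length of an answer in tokens (chunks of text, often a word or part of a word) by the speed. The 300-token answer length below is only an illustrative assumption, and the estimate ignores the time spent reading the prompt before generation starts.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Estimate how long a reply of num_tokens takes at a steady speed."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    # 7.73 tokens/s is the speed measured on the laptop above;
    # 300 tokens is an arbitrary example answer length.
    print(f"{generation_seconds(300, 7.73):.1f} seconds")  # prints "38.8 seconds"
```

So a 300-token answer takes a little under 40 seconds on that laptop, and doubling the speed would halve the wait.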

I also tried uploading a picture and asking for a summary. Even though I asked in Japanese, it replied in English, although its summary of the picture was correct.
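The local server can also accept images from a script. This is a minimal sketch, assuming the llama.cpp server's image_data field for LLaVA models (base64-encoded image data plus a numeric id, which the prompt references as [img-ID]) as it was documented around the time of this post; the id value, the prompt wording and the script usage are illustrative assumptions, and newer llamafile versions may use a different interface.

```python
import base64
import json
import sys
import urllib.request

# Assumption: the llamafile server is running locally on its default port.
COMPLETION_URL = "http://127.0.0.1:8080/completion"


def build_image_request(image_bytes: bytes, question: str, image_id: int = 10) -> dict:
    """Build a /completion body that attaches one image to the prompt."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    # [img-<id>] marks where the image goes in the LLaVA-style prompt.
    prompt = f"USER: [img-{image_id}]\n{question}\nASSISTANT:"
    return {
        "prompt": prompt,
        "image_data": [{"data": encoded, "id": image_id}],
        "n_predict": 256,
    }


def describe_image(path: str, question: str) -> str:
    """Send an image file plus a question and return the model's answer."""
    with open(path, "rb") as f:
        body = build_image_request(f.read(), question)
    req = urllib.request.Request(
        COMPLETION_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.loads(resp.read())["content"]


if __name__ == "__main__" and len(sys.argv) > 1:
    try:
        print(describe_image(sys.argv[1], "Summarize this picture."))
    except OSError as err:  # missing file or unreachable server
        print("Request failed:", err)
```

Saved as a script, it would be run with the image path as its only argument, for example python describe_image.py photo.jpg (both names are hypothetical).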

Wrapping Things Up

I've shown you an example of running an LLM on your own computer without online tools such as ChatGPT or Copilot. If you're an engineer who works with software, I'm sure you'll appreciate the amazing work many people have put into making this possible.

But what does this mean for the rest of us? I didn't intend this post as a technical explanation of the nitty-gritty details of how the different parts fit together to run LLMs on your computer.

But I hope it has shown you the speed of innovation and how much work is being done to bring the power of LLMs (and, by extension, AI) to the rest of us in an open way, accessible to anyone interested. In other words, democratizing it.

With these developments, I think we will see at least these two things happen within the next year or two:

Lowering Of Costs

- Open-Source LLMs: With the advances happening in the open-source LLM space, there are now alternatives to proprietary LLMs such as GPT-4, which ChatGPT uses.

- Increased Competition: The emergence of open-source LLMs introduces healthy competition. As more accessible alternatives become available, proprietary LLM providers may need to adjust their pricing strategies.

The Separation From Large Providers

- Monopoly Disruption: Proprietary LLMs have allowed a few companies to dominate the AI landscape. Open-source alternatives disrupt this dominance by providing accessible options, and monopolistic practices, such as controlling data access or pricing, may face more scrutiny as open-source LLMs gain prominence.

- Business Models Evolve: Companies may shift from selling LLM licenses to providing value-added services around open-source models, where customization, support and integration become crucial. Monetization strategies may move from direct licensing to subscription-based services or consulting.

- Strategic Alliances: AI platform companies may collaborate with open-source communities, and hybrid models combining proprietary and open-source components could emerge. Such partnerships can boost innovation and address market needs.

Kafkai doesn't use llamafiles, but I do use them for tasks such as summarizing short articles and rewriting some of my work, especially when I don't have an internet connection, like on a plane. At the very least, innovations and improvements like these are what make working in the AI industry fun and interesting.